Yeah, these are popping up all over the place - all from different users with newly created accounts and no other post/comment history. Most definitely sus.

geekwithsoul

I coalesce the vapors of human experience into a viable and meaningful comprehension.…

- 0 Posts

- 30 Comments

11·5 months ago

In addition to the point about Western mythologies dominating because of cultural exports, I think there is also the undercurrent of England’s original mythologies having been “lost” and so the English were always fascinated by the mythologies of the Norse (due to being invaded) and by the Greeks and Romans (as previous “great” civilizations they aspired to be).

Combine that with America’s obvious English influences and the influence of England as a colonizer around the world, and those mythologies gained a huge outsized influence.

1·6 months ago

I probably didn’t explain well enough. Consuming media (books, TV, film, online content, and video games) is predominantly a passive experience. Obviously video games less so, but all in all, they only “adapt” within the guardrails of gameplay. These AI chatbots however are different in their very formlessness - they’re only programmed to maintain engagement and rely on the LLM training to maintain an illusion of “realness”. And because they were trained on all sorts of human interactions, they’re very good at that.

Humans are unique in how we continually anthropomorphize not only tons of inert, lifeless things (think of someone alternating between swearing at and pleading with a car that won’t start) but also abstract ideals (even scientists often speak of evolution “choosing” specific traits). Given all of that, I don’t think it’s unreasonable to be worried that a teen with a still-developing prefrontal cortex, who is in the midst of working on understanding social dynamics and peer relationships, will imbue an AI chatbot with far more “humanity” than is warranted. Humans seem to have an anthropomorphic bias in how we relate to the world - we are the primary yardstick we use to measure and relate everything around us, and things like AI chatbots exploit that to maximum effect. Hell, the whole reason the site mentioned in the article exists is that this approach is extraordinarily effective.

So while I understand that on a cursory look, someone objecting to it comes across as a sad example of yet another moral panic, I truly believe this is different. For one, we’ve never had access to such a lively psychological mirror before and it’s untested waters; and two, this isn’t some objection on some imagined slight against a “moral authority” but based in the scientific understanding of specifically teen brains and their demonstrated fragility in certain areas while still under development.

3·6 months ago

Same! And if anyone disagrees, feel free to get in the comments! 😉

51·6 months ago

I understand what you mean about the comparison between AI chatbots and video games (or whatever the moral panic du jour is), but I think they’re very much not the same. To a young teen, no matter how “immersive” the game is, it’s still just a game. They may rage against other players, they may become obsessed with playing, but as I said they’re still going to see it as a game.

An AI chatbot who is a troubled teen’s “best friend” is different and no matter how many warnings are slapped on the interface, it’s going to feel much more “real” to that kid than any game. They’re going to unload every ounce of angst into that thing, and by defaulting to “keep them engaged”, that chatbot is either going to ignore stuff it shouldn’t or encourage them in ways that it shouldn’t. It’s obvious there are no real guardrails in this instance, as if he was talking about being suicidal, some red flags should’ve popped up.

Yes the parents shouldn’t have allowed him such unfettered access, yes they shouldn’t have had a loaded gun that he had access to, but a simple “This is all for funsies” warning on the interface isn’t enough to stop this from happening again. Some really troubled adults are using these things as de facto therapists and that’s bad too. But I’d be happier if lawmakers were much more worried about kids having access to this stuff than accessing “adult sites”.

That’s certainly where the term originated, but usage has expanded. I’m actually fine with it, as the original idea was about the pattern recognition we use when looking at faces, and I think there are similar mechanisms for matching other “known” patterns we see. Probably with some sliding scale of emotional response on how well known the pattern is.

74·6 months ago

Maybe? But in the article he was talking about his priority being that he wanted to disconnect from his phone but still wanted news. Just seems there’s been a solution for that for a few centuries now. His solution seemed to me at least to be a lemon that wasn’t worth the squeeze as it were.

44·6 months ago

So, a newspaper with a lot of extra steps? I understand the gee whizness of getting this all to work but not really sure there’s a solid “why” to this.

5·7 months ago

You know, I think a good rule of thumb is if someone finds humiliation “funny”, they’re generally kind of an asshole. Even if everyone is in on the “joke”, it’s revealing of a certain kind of mindset.

164·7 months ago

It’s more the fact they were running a mole in the CIA. If you want an example of more direct action, how does this one work for you (from just this week):

https://www.theregister.com/AMP/2024/09/25/chinas_salt_typhoon_cyber_spies/

In a related security advisory, government agencies accused the Flax Typhoon crew of amassing a SQL database containing details of 1.2 million records on compromised and hijacked devices that they had either previously used or were currently using for the botnet

Back in February, the US government confirmed that this same Chinese crew compromised "multiple" US critical infrastructure orgs’ IT networks in America in preparation for “disruptive or destructive cyberattacks” against those targets.

193·7 months ago

I mean, the subject of “Chinese Espionage in the United States” has a fairly lengthy page all of its own on Wikipedia with examples and concerns. https://en.m.wikipedia.org/wiki/Chinese_espionage_in_the_United_States

Probably one of the most notable examples:

Between 2010 and 2012, intelligence breaches led to Chinese authorities dismantling CIA intelligence networks in the country, killing and arresting a large number of CIA assets within China.[43] A joint CIA/FBI counterintelligence operation, codenamed “Honey Bear”, was unable to definitively determine the source of the compromises, though theories include the existence of a mole, cyber-espionage, compromise of Hillary Clinton’s illicit classified email server as noted by the intelligence community inspector general,[44] or poor tradecraft.[43] Mark Kelton, then the deputy director of the National Clandestine Service for Counterintelligence, was initially skeptical that a mole was to blame.[43]

In January 2018, a former CIA officer named Jerry Chun Shing Lee[note 1] was arrested at John F. Kennedy International Airport, on suspicion of helping dismantle the CIA’s network of informants in China.[47][48]

And that’s just one we all know about. Not to mention a rich history of Chinese state-sponsored corporate espionage and a history of let’s say playing fast and loose with international norms, human rights, etc.

“…insinuating that only bots are down voting…” (emphasis added)

No, they’re not saying “only”, they’re saying there may be bots doing some of the downvoting. E.g. probably based off IPs, client strings, or something else.

That’s not what he was saying.

Yeah, go see his response in another part of this thread to my request to provide some constructive feedback if you haven’t already. It was all just the same bad faith misinformation about the bot and the data source. I even linked the sources for how it actually worked and no reply, just downvotes.

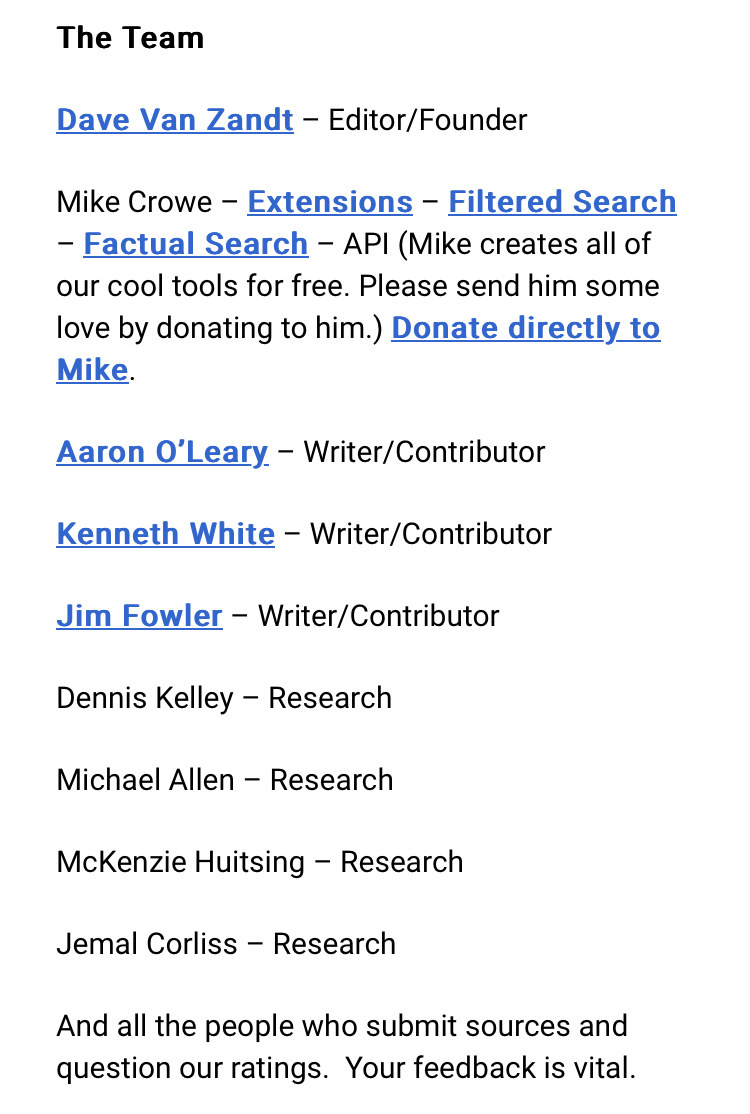

Currently the bot’s media ratings come from just some guy, who is unaccountable and has an obvious rightwing bias.

Wow! Talk about misinformation!!! https://mediabiasfactcheck.com/about/

Or maybe you think they were bought and paid for by some nefarious source? Nope…

Media Bias/Fact Check funding comes from reader donations, third-party advertising, and membership subscriptions. We use third-party advertising to prevent influence and bias, as we do not select the ads you see displayed. Ads are generated based on your search history, cookies, and the current web page content you are viewing. We receive $0 from corporations, foundations, organizations, wealthy investors, or advocacy groups. See details on funding.

…I would suggest making the ratings instead come from an open sourced and crowdsourced system. A system where everyone could give their inputs and have transparency, similar to an upvote/downvote system.

Such a system would take many hours to design and maintain, it is not something I personally am willing to contribute, nor would I ask it of any volunteers.

Thank you for at least providing an iota of something constructive. It’s an interesting idea, and there is academic research that shows it might be possible. But the problem is then in a world already filled with state- and corpo-sponsored organized misinformation campaigns, how does any crowdsourced solution avoid capture and infiltration from the very sources of misinformation it should be assessing? Look at the feature on Twitter and how often that is abused. Then you’d need a fact checker for your fact checker.

“universally destructive to understanding”

So what you’re saying is that no one derives any use from the bot? Wow, with that kind of omniscience, I’d expect we could just ask you to judge every news source. Win-win for everyone I suppose if you’re up for it.

Now “generally destructive” would probably be better wording for us mere mortals, but still seems to be a wildly generalized statement. Or maybe “inadequately precise” would be more realistic, but then that really takes the wind out of the sails to ban it, doesn’t it?

Because this is the first thing I think I’ve seen you post and blocking everything you disagree with seems sort of stupid?

I think the bot has issues, but I hardly agree that it’s posting misinformation. Incomplete? Imperfect? You bet. But that’s not “misinformation” in any commonly understood meaning. I think the intent of providing additional context on information sources is laudable.

As someone with such a distaste for misinformation, how would you suggest fixing it? That’s a much more useful discussion than “BAN THE THING I PERSONALLY AND SUBJECTIVELY THINK IS BAD!!!” You obviously think misinformation is a problem, so why not suggest a solution?

Just block it if you don’t like it?

4·7 months ago

For various reasons, I’ve gone to political rallies for a good portion of my life. They can be really fun or incredibly boring. There’s a sense of community like you get at a concert or church service, and at the good ones, it can be quite a charge to be sharing the moment with others. At the bad ones, it can seem shrill or too amped or, on the opposite side, dull as dishwater.

tl;dr - basically like a school pep rally for adults, but without the drawback of being required

Black gospel music has often been the only Christian-oriented music that really did it for me. A lot of blues, soul, etc was created by folks who got their start in black gospel music, and used what they learned there in secular genres. Hugely influential in so many different types of music.